Requirements

In order to follow this tutorial, make sure you have Docker installed and working on your development computer (verify it by running $ docker -v in your command line).

You don’t need to have any prior Docker experience as I will try to explain things from the ground up. If you have any questions, don’t hesitate to leave a comment below.

Last but not least, a word of caution: while Docker is supported natively on Linux, it requires an additional layer to work on Windows and Mac (which, if you installed it, you already know). This slightly undermines the advantages of using Docker for now, but since I have access to the beta (and you can too! Apply here), I can assure you these issues will go away soon. The issue is that you still need to have a virtual machine running, but the good part is that it only runs a tiny wrapper Linux distribution called boot2docker.

So what is Docker?

In order to understand what Docker is, let’s look at a couple of problems we (and by we I mean WordPress developers) face on almost a daily basis.

We’ve all been in situations where we needed to share our code with another developer (or many of them). Once we committed our code to GitHub (you do use Git, right?) and they tried to run it, it didn’t work. When asked what the problem was, we all replied with this familiar phrase: it works on my computer.

When two developers are working on the same project this doesn’t seem like a big issue – they sit down together (or virtually), resolve the issue and hopefully document it. But what happens when there are 20 developers working on the same project? Or 50? Things can get out of hand quickly.

The same goes for production. How many times did you write some code and test it locally, only to face the white screen of death once it was deployed to production? Now imagine a team of 20 developers deploying to the same production environment. Insane amounts of time are wasted on devops rather than on development, which translates to insane amounts of money, because someone has to pay for the time spent on these issues.

Let’s take a step back and look at some possible culprits that cause these issues:

– different WordPress (and/or plugin) versions

– different PHP versions

– different webserver (Apache/Nginx) versions

– different MySQL versions

– different operating system altogether

– different configurations of all the above (memory/CPU limits, installed modules)

So what is it that Docker brings to the table? One thing: consistency. It guarantees the environment will be identical no matter where you run it: locally, on 50 different computers, in a CI (continuous integration) environment or in production – it will work exactly the same, because it will use the same environment and code.

These problems are of course not new and there’s been an alternative that tried at least to some extent to mitigate the issues of inconsistency: Vagrant. Much like Docker, it provides a recipe for building consistent environments, which comes at a price: It requires you to run at least one virtual machine – which unlike Docker, is a full-fledged Linux distribution, with problems I’ll try to outline throughout this tutorial.

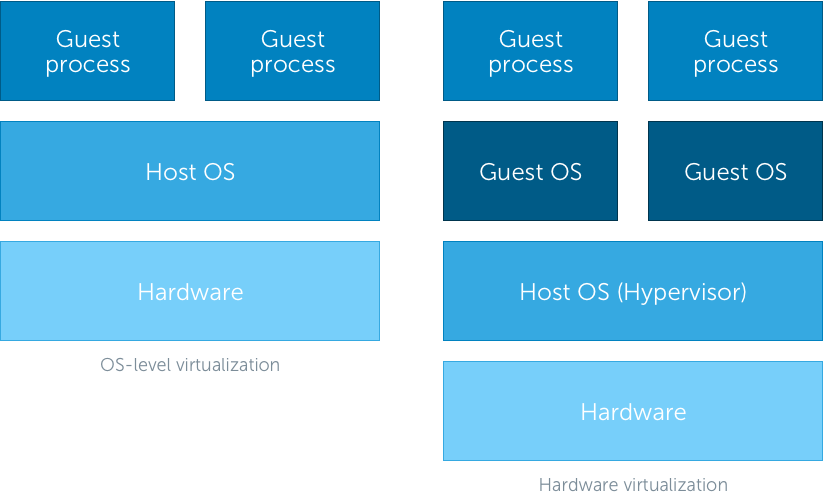

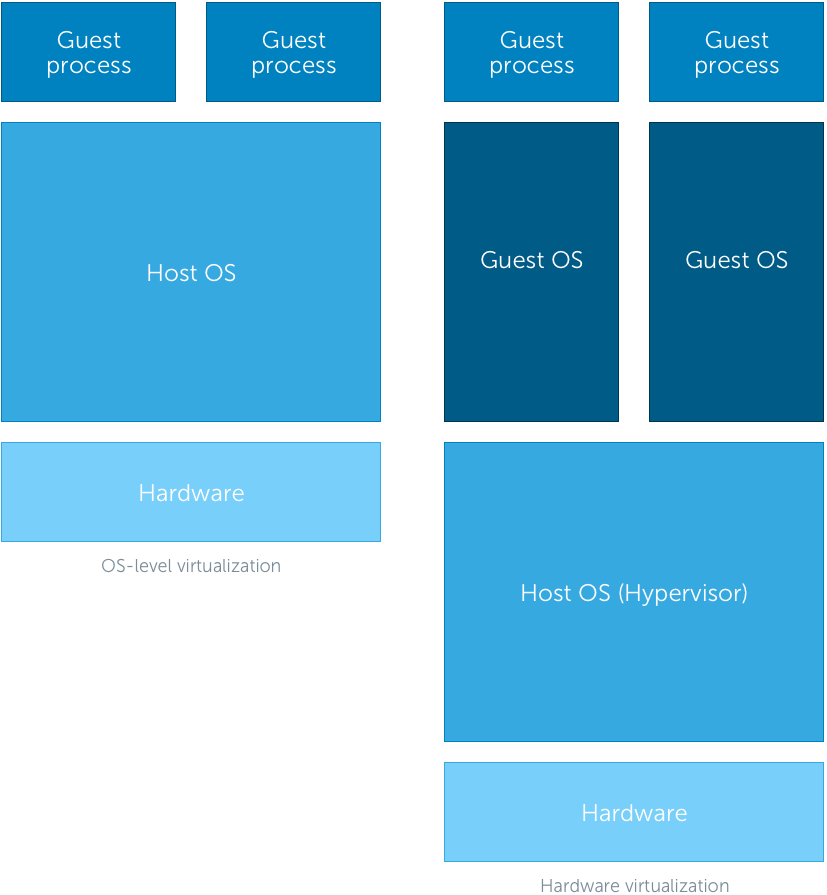

To illustrate the first of these problems, many Docker tutorials show this diagram:

At first glance, it doesn’t seem like a huge difference, so let me show you a more accurate diagram which takes system resources into account:

That extra layer doesn’t look that innocent anymore, does it? And in fact, it is not – the virtual/guest operating system needs a lot of locked resources to run, and you’re bound to quickly run out of them if you don’t carefully assign a proper amount to each guest OS. By locked, I mean that once you assign an amount of CPU, RAM and hard drive space to the guest OS, the host can’t use those, regardless of whether the guest uses a big portion of them or only a small one – these resources are not shared.

By now you might be thinking that Docker is some kind of a trimmed virtual machine, and you’re half right: it is virtual all right, however not a machine, but rather an environment. Where a virtual machine thinks it’s a physical machine (through virtualization software such as VirtualBox or VMware Fusion), a Docker container (more on the terminology soon; for now, think of it as a trimmed Linux VM) relies on the host OS for any resources it needs and only includes the bare minimum it needs to run a Linux environment – sometimes as little as 5 megabytes of disk space and significantly less memory than traditional VMs.

In order to understand the second biggest benefit that Docker brings to the table, let’s look at development for a bit (Not PHP or WP, just development in general). There are quite a few development principles out there that – if practiced – make developers’ lives significantly easier, and there is a set of them called SOLID.

The very first principle (the single responsibility principle) is Docker’s second biggest benefit: each Docker image is responsible for doing one thing and one thing only. Do you need a webserver? Take the Nginx image. Need a PHP interpreter? There’s an image for that. MySQL? Check.

Indeed, what we usually end up with are several Docker containers orchestrated by some higher-level software, such as Docker itself, Docker Swarm or Kubernetes, so that they properly work together. We will cover Kubernetes in depth in the third post of this series.

It is certainly possible to build one single image that includes all these responsibilities, but I’ll do my best to explain why you should avoid that.

Our first image

Now that you have a basic understanding of what Docker is, let’s try to build a basic LEMP stack (Linux, Nginx, MySQL, PHP), which we will use for our WordPress website going forward.

Since all Docker containers are based on Linux, there’s no need to build it specifically, so let’s instead focus on Nginx as our first part. Create a new project directory and, in it, create a file called index.html and put the following HTML snippet in:

<h1>Hello world from Docker!</h1>

Then run this command from the same directory – assuming you’ve installed Docker successfully:

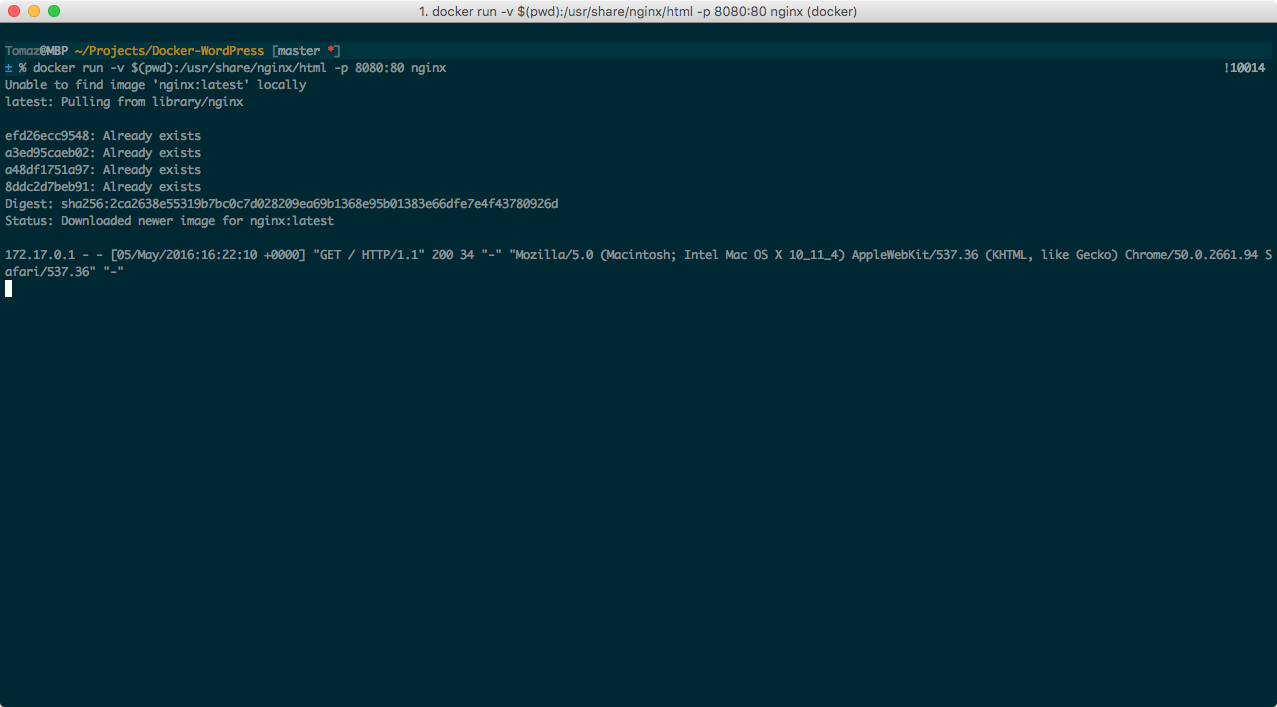

$ docker run -v $(pwd):/usr/share/nginx/html -p 8080:80 nginx

(If you get this error: docker: Cannot connect to the Docker daemon. it means you haven’t loaded the docker-machine settings into the shell; run $ eval "$(docker-machine env default)" to fix that.)

Since you most likely don’t have the Nginx image locally, Docker will download it from the official repository and run it with the volume and port parameters you entered. It will not give you any indication of whether it’s running or not (at first, it just seems it hung):

So open your browser and navigate to http://localhost:8080 (or, if you use docker-machine, which is more likely, swap localhost for your docker-machine’s IP) and lo and behold, Hello World!. In order to stop the process, press CTRL + C.

What just happened?

While it may seem like a bit of magic at first, it’s actually quite simple: Docker downloaded the image (nginx – the last word in the command), then ran a container from that image with two special parameters. It mounted our project directory ($(pwd) expands to the current, or present, working directory) into /usr/share/nginx/html, and it mapped the container’s port 80 to the host’s port 8080. This is what the colon character does: it maps ports and/or volumes from the host to the container, so 8080:80 means use port 8080 on the host and map it to port 80 inside the container.
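The colon notation can be tried out in plain shell, without Docker. This is only a sketch of how to read such a pair; the variable names (mapping, host_port, container_port) are made up for illustration and are not something Docker itself exposes:

```shell
# Read a host:container pair the way you would read `-p 8080:80`.
mapping="8080:80"
host_port="${mapping%%:*}"       # text before the first colon: the host side
container_port="${mapping#*:}"   # text after the first colon: the container side
echo "host port ${host_port} -> container port ${container_port}"
```

The same left-is-host, right-is-container reading applies to the -v parameter, where the two sides are paths instead of ports.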

Note: In case you’re not familiar with the term mounting: It’s a process in which a computer plugs in a volume (a block of disk space) under a certain path in the container. You’re experiencing this on a daily basis whenever you plug in a USB key or insert a DVD – it gets automatically mounted by the operating system. This is also the reason why you have to safely remove USB keys: The OS needs to unmount the drive so you don’t end up with corrupt data! One important note here: If you mount a host’s directory into the container under a path that already exists there (and contains files), that directory will not be lost, just inaccessible while the directory is mounted.

Let’s stick with the DVD analogy a little bit longer, because it can help us understand the main difference between a Docker image and a container: an image is a packaged, distributable and immutable filesystem (much like a DVD), while the container provides an isolated, bare-bones Linux environment in which that image can be run (much like a DVD-ROM drive, the physical component of the computer that allows you to run whatever is on a DVD).

The immutable aspect of images makes them especially interesting (immutable means you can’t mutate or permanently change any of the files inside an image once it’s built), because it makes them completely disposable (another term you might come across is ephemeral – same thing). Images can be rebuilt from a recipe (called a Dockerfile) over and over again, so if you need to make any changes, you just rebuild them.

Images also follow another very useful SOLID principle: The open-closed principle. It states that a piece of code is closed to modification but open to extension. Similarly, images cannot be modified, but can be extended.

Because our basic Nginx image knows nothing about PHP (or anything else for that matter) it’s time for us to extend it and we do that with…

The Dockerfile

Simply put, a Dockerfile is a file that contains a set of instructions Docker follows to build an image. Think of it as a blueprint for our final image. The only mandatory instruction that you need to put into a Dockerfile is the FROM instruction; it lets Docker know which image you’d like your own to be based on. The official repository holds images of any kind of Linux flavor or configuration you can imagine, and much like on GitHub, they are named username/image-name:tag, except for the official ones, which don’t require a username (the tag is always optional).
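To make the naming scheme concrete, here is a small shell sketch that splits an image reference into its parts. describe_image is a hypothetical helper written for this tutorial, not a Docker command, and it deliberately ignores more complex references (private registries with a port number, digests). It assumes, as Docker does, that a missing tag means latest:

```shell
# describe_image REF: print "name | tag | kind" for an image reference.
describe_image() {
  ref="$1"
  case "$ref" in
    *:*) name="${ref%:*}"; tag="${ref##*:}" ;;  # split at the colon
    *)   name="$ref";      tag="latest" ;;      # no tag given: Docker uses "latest"
  esac
  case "$name" in
    */*) kind="user image" ;;                   # username/image-name
    *)   kind="official image" ;;               # no username part
  esac
  echo "$name | $tag | $kind"
}

describe_image nginx:1.10-alpine      # prints: nginx | 1.10-alpine | official image
describe_image someuser/some-image    # prints: someuser/some-image | latest | user image
```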

So, create an empty file called Dockerfile (no extension) next to your index.html and paste the following two lines into it (obviously change the MAINTAINER instruction to match your name and email):

FROM nginx:1.10-alpine

MAINTAINER Tomaz Zaman <[email protected]>

Our custom image will be based on the official Nginx Alpine image. Alpine is a tiny Linux distribution, weighing only 5MB, and it’s becoming very popular in the Docker community because it provides a package installer out of the box called apk (similar to apt-get on Debian/Ubuntu) and because, well, it’s small, which is a good thing since you’ll eventually transfer images around (to production), and it makes sense to use small ones – Debian, for comparison, takes roughly 200MB because it includes much more stuff that we don’t need. We just need Nginx and its dependencies.

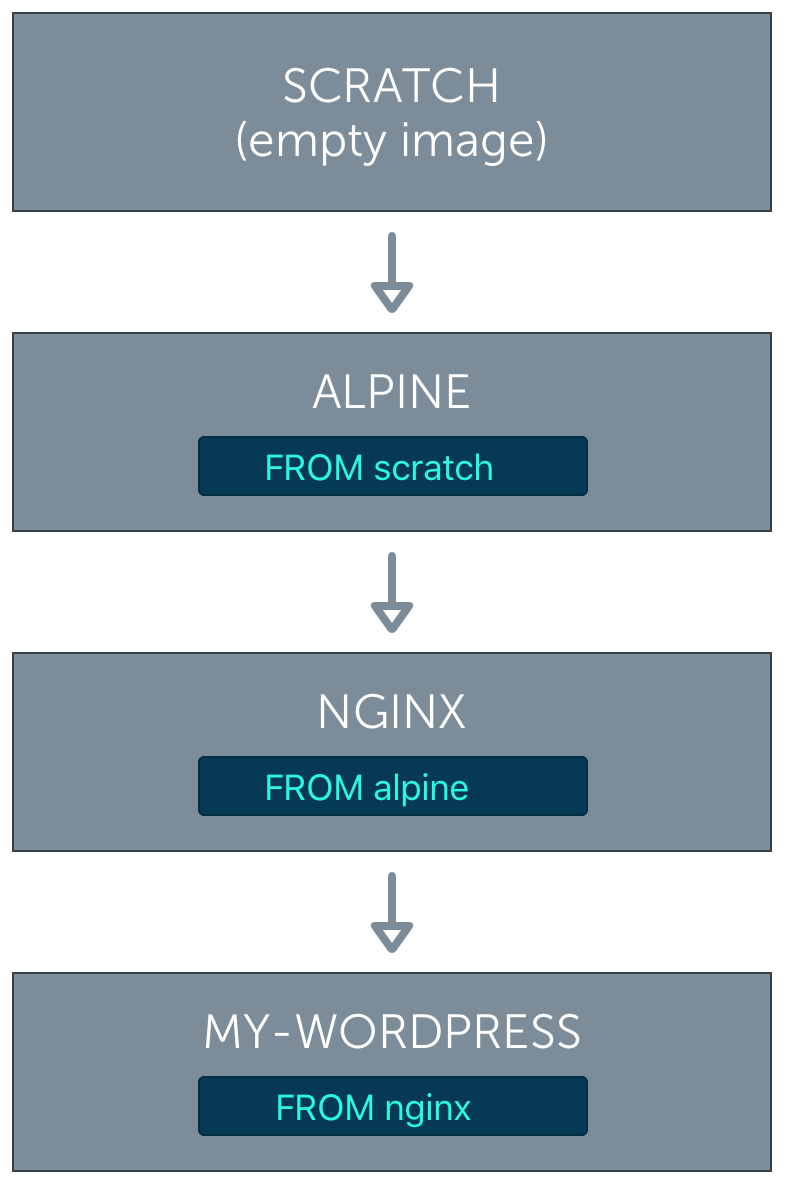

Here’s another significant benefit of using Docker: images can be extended. And the resulting images can be extended too, as deep as you like. If you want to investigate how deep the rabbit hole of image inheritance goes, check the Dockerfile of nginx:1.10-alpine: it starts with FROM alpine:3.3. So let’s check Alpine’s Dockerfile. It inherits from an image called scratch, which is a completely empty image. It doesn’t do anything, but it’s essentially the grandmother of all other images, which is why the word scratch is reserved in Docker. Here’s how the image inheritance looks in our case:

One Dockerfile instruction which we will not cover in this tutorial, but is worth mentioning is the CMD instruction. That instruction sets a default command to run when the container is started without any parameters, but we didn’t need to define it, because Nginx’s Dockerfile already does. If it didn’t do that, we would then have to run Nginx with a command like this (an example, no need to run it):

$ docker run -v $(pwd):/usr/share/nginx/html -p 8080:80 nginx nginx -g "daemon off;"

(In case you were wondering why the word nginx appears twice: the first instance is the name of the image, while the second – and everything after it – is the actual command we’re running inside the container.)

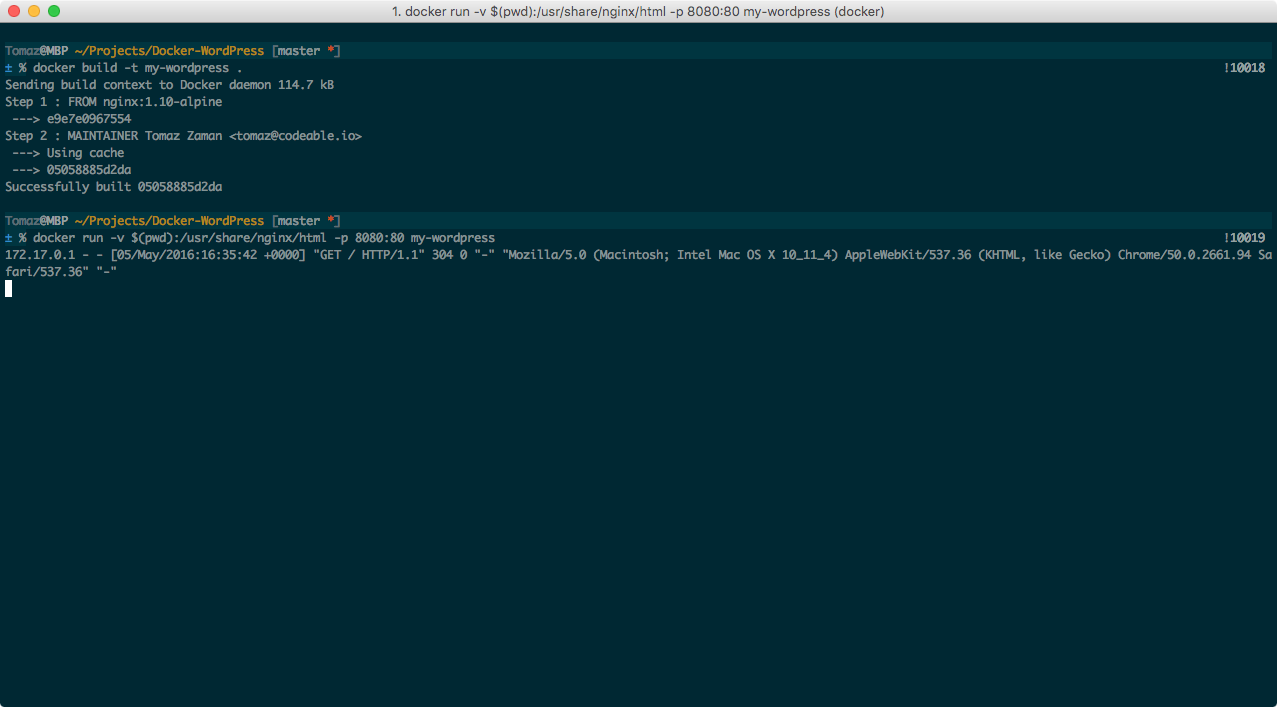

So in order to build our image, run:

$ docker build -t my-wordpress .

This command reads the Dockerfile in the current directory and tags the image as my-wordpress, because that’s easier to type than the random string of characters Docker automatically generates if we don’t tag it. To test it out, run:

$ docker run -v $(pwd):/usr/share/nginx/html -p 8080:80 my-wordpress

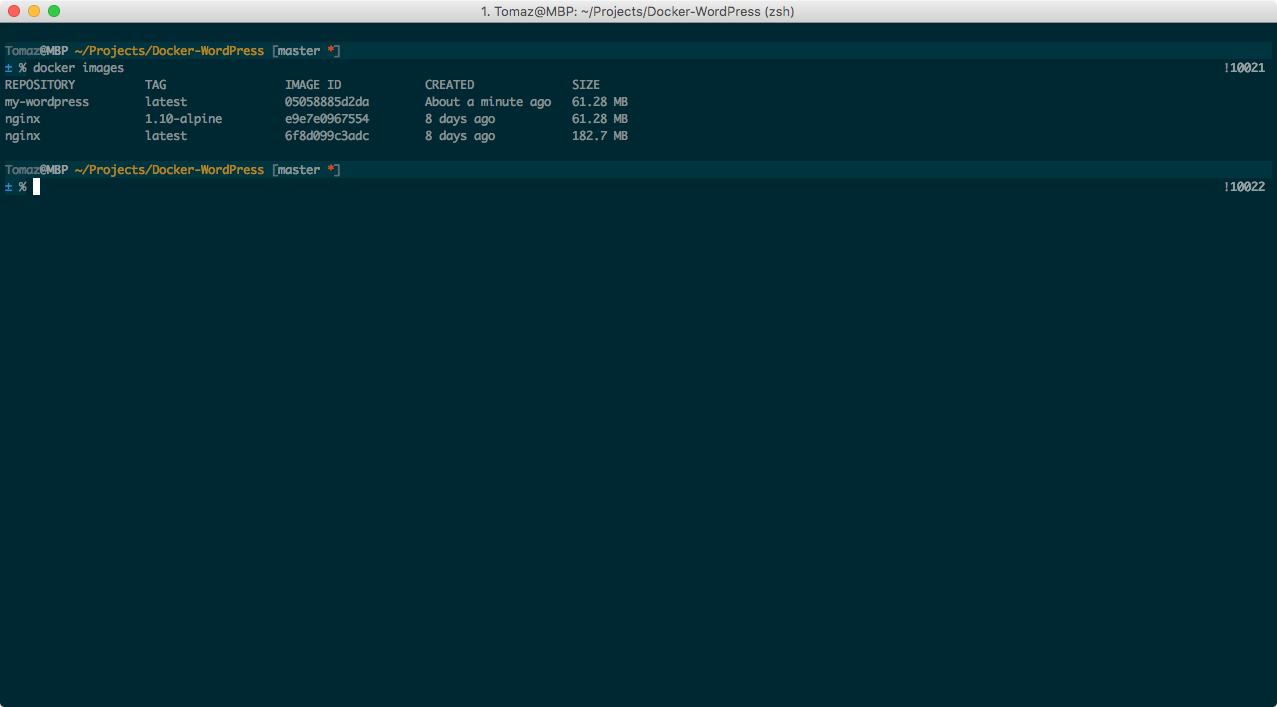

Not much has changed, but if you run $ docker images, you’ll find both the default nginx image (which we ran in our first try) and our my-wordpress image. Look at their sizes: the default image is ~182MB, while our custom image is only ~61MB. You might think we could have run the initial command (before we used the Dockerfile) with the Alpine version, and indeed we could; I’m using this example to show you how you can get your image sizes down by doing a bit of research on your options. In Nginx’s case, you have quite a few.

If you try running the same command without the -v parameter ($ docker run -p 8080:80 my-wordpress) you’ll notice our index.html isn’t being served anymore – the default Nginx welcome page is, which is not very useful, so let’s add another instruction to our Dockerfile (at the bottom):

COPY index.html /usr/share/nginx/html

As you might imagine, this instruction copies our index.html into Nginx’s default directory (overwriting any existing files with the same name). Again, not a big deal, but we just made our image self sufficient. It became distributable.

The main difference between using volumes and copying files is that you usually mount volumes in development but will need files copied into the image for distribution (production). Mounting volumes in development saves a significant amount of time because you don’t have to rebuild the image for every change – Docker temporarily swaps the source directory on the image with the one you have locally.

Why is this awesome? Because we don’t need to install anything on our development machine; everything needed to run whatever we want to run is provided by images! This means you no longer need to install Nginx, PHP or MySQL on your development computer, which is a good thing, since all these processes occupy valuable resources if we don’t turn them off – and we mostly don’t, they just run in the background.

So if you build the image again and run it without mounting the volume, you will see our Hello World! again.
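As a convenience, the rebuild-and-run cycle can be wrapped in a small shell function. This is just a sketch: rebuild_and_run is a hypothetical name, and the --rm flag (which removes the container once it exits) is my addition and not part of the commands used so far:

```shell
# rebuild_and_run: rebuild the image from the Dockerfile in the current
# directory, then start a container from it WITHOUT mounting any volume,
# so whatever gets served comes from the files copied into the image.
rebuild_and_run() {
  docker build -t my-wordpress . &&
    docker run --rm -p 8080:80 my-wordpress
}

# Usage (from the project directory): rebuild_and_run
# Stop the container with CTRL + C, as before.
```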

Configuring Nginx

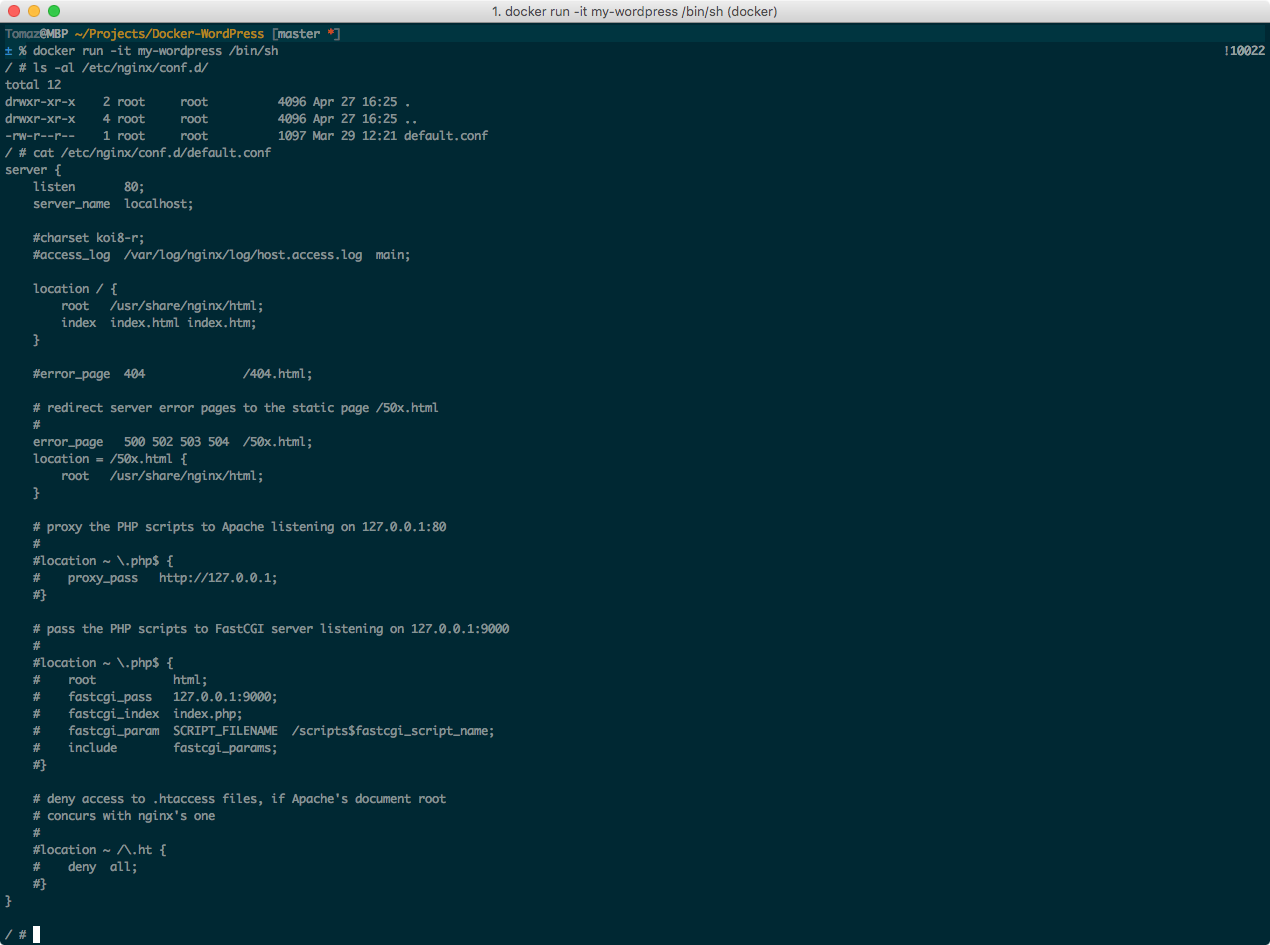

Our Nginx image is now up and running, but we’re not quite there yet – we need to modify it according to our needs. The most important part is, of course, the virtual host. In order to create one, we need to somehow override Nginx’s default configuration. Let’s connect to the container to find out where that is:

$ docker run -it my-wordpress /bin/sh

If you ever used SSH to connect to a server before, this command may seem quite similar, but in truth, there is no SSH involved here, we are just running the default container’s shell and attaching it to our command line (or terminal, if you’re on OSX) – the -it part makes it interactive.

Usually on Debian/Ubuntu based systems, Nginx configuration is set up in /etc/nginx and luckily this image uses the exact same structure, so if you run $ ls -al /etc/nginx/conf.d/ (inside the container), you will indeed see a single file in there, called default.conf, which we will overwrite shortly. But first, let’s verify that there is, in fact, a virtual host in there:

$ cat /etc/nginx/conf.d/default.conf

Notice the server { ... } block in the output? That’s it. Let’s create our own version of this file now: Run $ exit to disconnect from the container and create a new file inside our project directory, named nginx.conf. Put the following snippet into it:

server {
    server_name _;
    listen 80 default_server;
    root /var/www/html;
    index index.php index.html;
    access_log /dev/stdout;
    error_log /dev/stdout info;
    location / {
        try_files $uri $uri/ /index.php?$args;
    }
    location ~ \.php$ {
        include fastcgi_params;
        fastcgi_pass my-php:9000;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    }
}

Since index.php doesn’t exist yet, create it next to the index.html in the project directory and put <?php phpinfo(); ?> in it.

Then open the Dockerfile and modify it to look like this:

FROM nginx:1.10-alpine

MAINTAINER Tomaz Zaman

RUN mkdir -p /var/www/html

WORKDIR /var/www/html

COPY nginx.conf /etc/nginx/conf.d/default.conf

COPY . ./

We added a couple of instructions here: COPY (which we already know), RUN and WORKDIR. We could ignore the latter two for now, but they present an important concept of building images: every project needs a root directory and the default ones aren’t necessarily the best (or don’t even exist), so first, we’re creating /var/www/html (which will be the root of our WordPress install) and then setting the WORKDIR to it, which means all the subsequent instructions can just use ./ to describe the current working directory. An example of that is on the very last line of this Dockerfile: we are copying . into ./, which will result in all the files from your host’s project directory being located at /var/www/html/ inside the image. WORKDIR essentially does the same as running the cd command on Linux.
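If the RUN, WORKDIR and COPY trio feels abstract, the following plain-shell sketch mimics it on your own machine. It is only an analogy: two temporary directories stand in for the host project directory and the image’s filesystem (project and image_root are names I made up), and no Docker is involved:

```shell
# Stand-ins: $project plays the host project directory,
# $image_root plays the root of the image's filesystem.
project=$(mktemp -d)
image_root=$(mktemp -d)
echo '<?php phpinfo(); ?>' > "$project/index.php"

mkdir -p "$image_root/var/www/html"   # like: RUN mkdir -p /var/www/html
cd "$image_root/var/www/html"         # like: WORKDIR /var/www/html
cp -R "$project/." ./                 # like: COPY . ./
ls                                    # index.php now sits in the "image"
```

Note how the copy only needs the relative ./ target once the working directory has been set, which is exactly what WORKDIR buys us in the Dockerfile.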

If you try building the image and running it, you’ll get an error. By default, the Nginx image only serves plain old HTML; in our configuration, though, we introduce a fastcgi block (location ~ \.php$ { ... }) that points to another host (my-php), which doesn’t exist yet, so let’s create it now.

PHP-FPM image

I’ve seen Docker images that try to run both Nginx and PHP-FPM inside the same container, which is a big no-no for me. Remember the single responsibility principle we mentioned above? What we should have are two images: One for Nginx (where serving is the responsibility) and one for PHP-FPM (where parsing PHP is the responsibility).

Why is having two separate images important? Because as you’ll see soon, processes in containers don’t (or are not supposed to, at least) run in the background, they should be running as the main – and only – container process so that in case they fail, Docker (or the managing software) will know something happened and will at least attempt to restart the container. So ideally, one image => one foreground process. Granted, if we ran Apache instead of Nginx, this problem wouldn’t exist (because PHP comes as an Apache module, so there’s no separate process), but sometimes it pays off to overcomplicate things for learning purposes. Apart from that, many advanced developers prefer Nginx over Apache for numerous reasons – and so do I.

In order to build our PHP image, let’s first investigate our options in the official repository. There are many of them, but none come with built-in support for MySQL and many other WordPress dependencies, so we’re again forced to build our own. But it shouldn’t be a problem since it’s only a couple lines in the Dockerfile, right? Let’s do that; Create a new Dockerfile called Dockerfile.php-fpm and put the following code in it:

FROM php:7.0.6-fpm-alpine

MAINTAINER Tomaz Zaman

RUN docker-php-ext-install -j$(grep -c ^processor /proc/cpuinfo 2>/dev/null || echo 1) \
    iconv gd mbstring fileinfo curl xmlreader xmlwriter spl ftp mysqli

VOLUME /var/www/html

The RUN instruction here is a bit different since we need to install a couple of dependencies that are by default not present in the official image and we need them in order to run WordPress. Luckily, we have the command docker-php-ext-install at our disposal (read the docs on Docker Hub if you don’t understand where it comes from), and we call it with the -j parameter. This command looks quite scary at first, but is actually just to let the extension installer know how many processors it can use. This is important because some of the PHP extensions need to be compiled on the fly – a computationally expensive operation. So, let’s now build the PHP-FPM image:

$ docker build -t my-php --file Dockerfile.php-fpm .

You will see a lot going on on the screen (compilation), but it shouldn’t take long. Luckily for us, Docker caches instructions in what are called layers (more on that in the next part of this series), so if you try to run the same command again, it’ll take only a second or so.
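The expression passed to -j in the Dockerfile counts the processor entries in /proc/cpuinfo. You can try the same idea in a regular shell; the sketch below wraps it in a hypothetical cpu_jobs helper (my naming) that falls back to a single job when the file is missing or lists no processors:

```shell
# cpu_jobs: print how many parallel jobs to hand to a -j parameter.
cpu_jobs() {
  # Count lines starting with "processor"; stay quiet if the file is absent.
  n=$(grep -c ^processor /proc/cpuinfo 2>/dev/null || true)
  case "$n" in
    ''|0) n=1 ;;   # nothing counted: fall back to one job
  esac
  echo "$n"
}

echo "docker-php-ext-install -j$(cpu_jobs) mysqli"
```

On a machine without /proc/cpuinfo (a Mac, for example) this prints -j1; inside the Linux-based build container it prints the number of available processors.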

The last bit of the Dockerfile is the VOLUME instruction. This time, we are not copying files into the image, which may seem a bit confusing at first, so let me explain what is going on: The image has no source files on its own, it expects an external volume to be mounted onto it during runtime and here lies another benefit of Docker: We can share volumes among containers, which means we can plug some arbitrary host’s directory into any number of containers at the same time and they’ll all have access to it. This is important because not doing so would lead to inconsistencies between files on Nginx and PHP-FPM and we’d like to avoid that. So why not just share the same data between them?

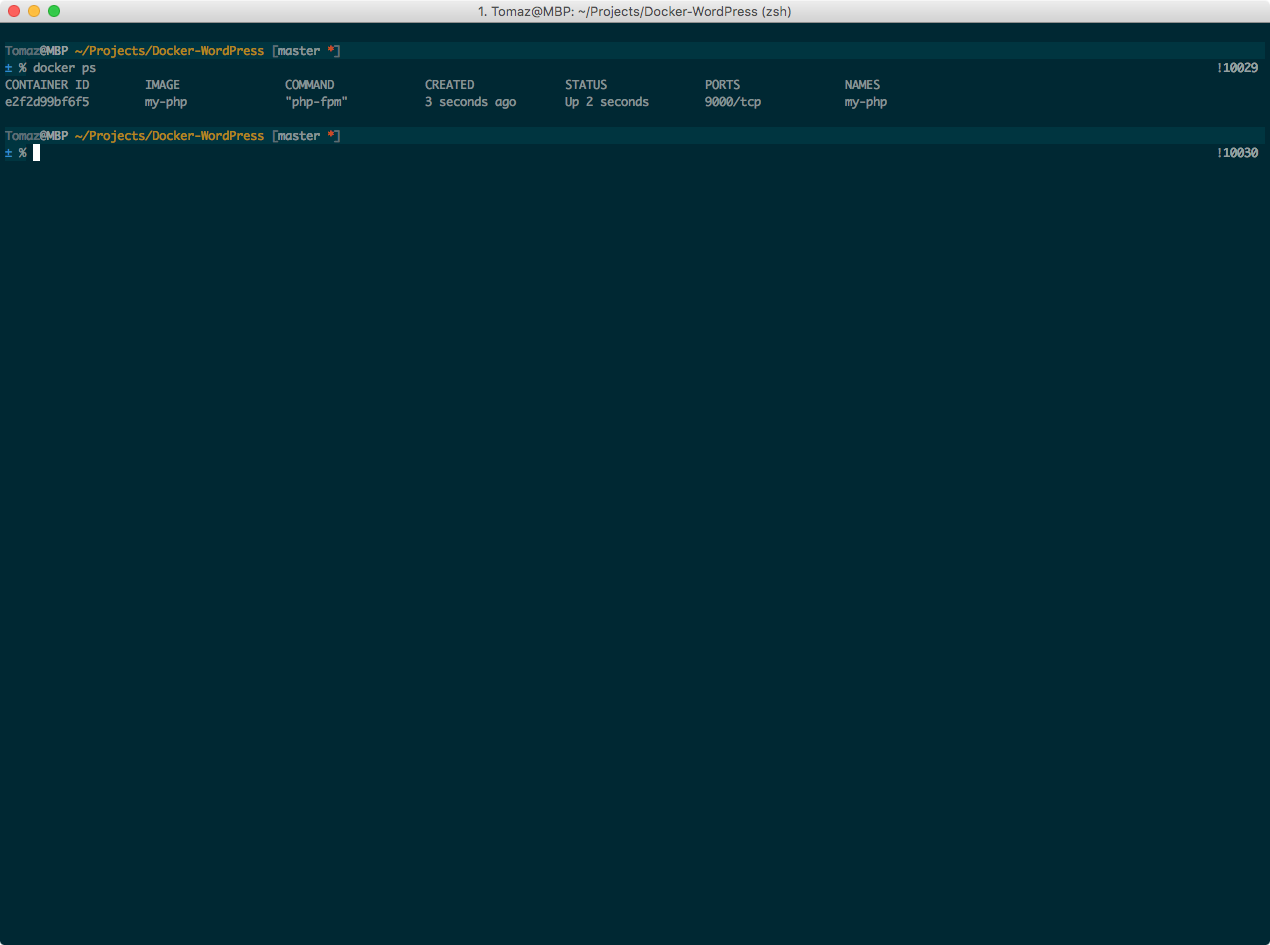

With both our images ready, it’s time to test drive the connection between them. First, bring up the PHP-FPM image (because it’s a dependency for our Nginx configuration) and run it in the background (which is what the -d stands for):

$ docker run -v $(pwd):/var/www/html --name my-php -d my-php

Because this command only prints the new container’s ID, run $ docker ps to make sure that the container is running. Note the CONTAINER ID, because you’ll need it to stop the container by entering $ docker stop f0b621891979 (replace the last string with your ID). And indeed, it’s running:

Also, note that we gave it a name so that we will be able to link it to the Nginx image, which we bring up like this:

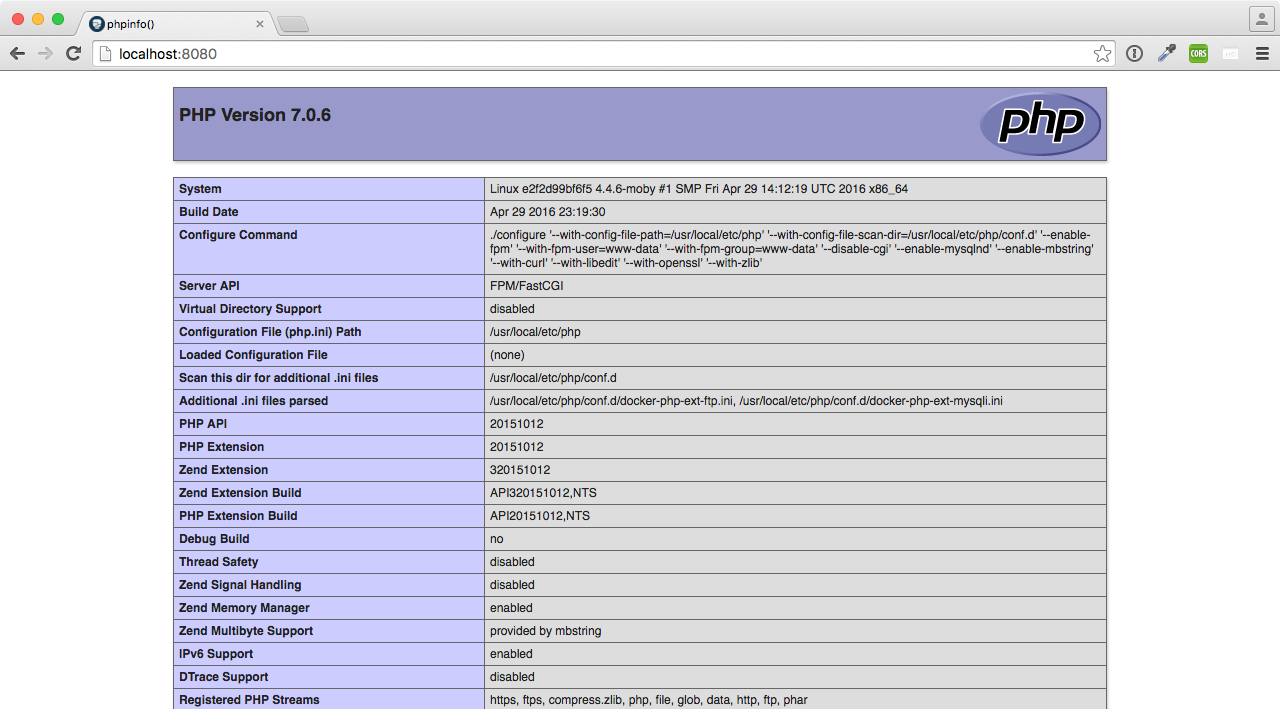

$ docker run -it --link my-php:my-php -p 8080:80 my-wordpress

Switch back to the browser and visit the same address you used to verify Nginx is working, and lo-and-behold!
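Once you’re done testing, remember that the PHP-FPM container is still running in the background. Since we named it, it can be stopped by name instead of by ID. The sketch below also removes it, because a stopped container keeps its name, and a later docker run with the same --name would fail with a naming conflict. stop_and_remove is a hypothetical helper name:

```shell
# stop_and_remove NAME: stop a running container and delete it,
# which frees its name for the next `docker run --name NAME ...`.
stop_and_remove() {
  docker stop "$1" && docker rm "$1"
}

# Usage: stop_and_remove my-php
```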

The next logical step from here would be to set up our MySQL image, but before we do that, let’s visit another tool that will make our lives a bit easier. As you might have noticed by now, Docker commands can become very verbose and, as usual, there’s ~~an app~~ a tool for that!

Composing commands

Before we continue, let’s check some of our commands we had to run in order to build our images and run our containers. More importantly, let’s investigate which parts are being repeated over and over:

- Both images require an external volume to be mounted under /var/www/html

- Both images expose certain ports (80 for Nginx, 9000 for PHP-FPM)

- Both images build from a custom Dockerfile

- Nginx relies on PHP-FPM to be present (as the my-php hostname)

To make our lives easier, Docker ships with a tool that allows us to put all this configuration in a file in order to make the commands shorter (and fewer of them). The tool is called docker-compose (it used to be called fig back in the early days) and requires a file named docker-compose.yml to be present in your project’s directory, so create one and put this code in:

version: '2'
services:
  my-nginx:
    build: .
    volumes:
      - .:/var/www/html
    ports:
      - "8080:80"
    links:
      - my-php
  my-php:
    build:
      context: .
      dockerfile: Dockerfile.php-fpm
    volumes:
      - .:/var/www/html
    ports:
      - "9000:9000"

Instead of always tagging and naming images on the fly, docker-compose extracts all the necessary information from the configuration file. In it, we define two services, one called my-nginx (our Nginx image) and the other my-php.

The my-nginx image uses the current directory for the build path (hence the .) because docker-compose by default expects a Dockerfile in there. Furthermore, we also mount the current project directory on the host to /var/www/html in the container (via volumes key) and we also map the ports, just as with our -p command, which will not be necessary anymore. Lastly, we link it to the my-php image – Docker makes an internal network for that behind the scenes.

As for the my-php image, the build key is slightly different, because its Dockerfile isn’t using the standard name (see here for more details). Like our my-nginx image, we also mount the volume and map the ports.

Now here comes the fun part! In order to build the images using docker-compose, just run (do it twice to see caching in effect):

$ docker-compose build

Once built, we only need to run one more command to bring up the containers:

$ docker-compose up

Yes! That’s it – no more lengthy docker commands, docker-compose all the way!
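A few more docker-compose subcommands are handy in day-to-day work. The sketch below wraps them in two hypothetical helper functions (stack_start and stack_stop are names I made up); the subcommands themselves (up -d, ps, stop) are regular docker-compose commands:

```shell
# stack_start: bring the services up in the background, then list them.
stack_start() {
  docker-compose up -d &&   # -d: detached, same idea as `docker run -d`
    docker-compose ps       # show the services and their current state
}

# stack_stop: stop the running services without removing their containers.
stack_stop() {
  docker-compose stop
}

# Usage (from the directory containing docker-compose.yml):
#   stack_start
#   stack_stop
```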

What about MySQL?

One very important part of building images with Docker that we haven’t talked about yet is environment variables. We will discuss them in more depth in the next part of our tutorial, but as a quick introduction, we will configure our MySQL image using them.

Unlike our PHP-FPM image, there’s no need to build a custom MySQL image (although you certainly can, if you want to), the official one already ships with everything we need!

Because of that, we will skip the lengthy and cumbersome docker command and add the configuration straight to the docker-compose.yml file. Add the following lines at the bottom (be careful it’s indented like other services):

  my-mysql:
    image: mariadb:5.5
    volumes:
      - /var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: wp
      MYSQL_DATABASE: wp
      MYSQL_USER: wp
      MYSQL_PASSWORD: wp

Also don’t forget to link it from the my-php image, so add this at the end of it:

  my-php:
    (existing configuration)
    links:
      - my-mysql

If you try running $ docker-compose build, you’ll notice that the MySQL image is not being built – that’s because it’s already built and stored in the official repository, we just need to download it, which will happen automatically when you run it, so go ahead:

$ docker-compose up

To make sure it’s actually working, switch to your code editor (but don’t stop docker-compose), create a new file named db-test.php in your project’s root directory and put the following content in:

<?php

$dbuser = 'wp';

$dbpass = 'wp';

$dbhost = 'my-mysql';

$connect = mysqli_connect($dbhost, $dbuser, $dbpass) or die("Unable to Connect to '$dbhost'");

echo "Connected to DB!";

Switch back to your browser and manually enter this file into the address bar, so it looks something like this: http://localhost:8080/db-test.php. If you see Connected to DB!, pat yourself on the back for a job well done!

So why is it working?

Because the official MariaDB image is built in a way that accepts environment variables for the most common configuration options. That’s why we added the environment key to our docker-compose configuration and set these options there. Having environment variables as configuration options becomes very useful once we start scaling our applications (and WordPress is no different) horizontally by introducing new servers (many servers serving the same code) – it adheres to the twelve-factor app methodology.
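To get a feel for how a program inside a container can pick up such settings, here is a minimal shell sketch. It is not how the MariaDB image is implemented; db_settings is a hypothetical function, and the wp fallbacks are just the values used in this tutorial:

```shell
# db_settings: read configuration from the environment, falling back to
# a default whenever a variable is unset or empty.
db_settings() {
  echo "database=${MYSQL_DATABASE:-wp} user=${MYSQL_USER:-wp}"
}

db_settings                              # uses whatever the environment provides
( MYSQL_DATABASE=blog; db_settings )     # same code, different configuration
```

The code stays the same on every server; only the environment changes, which is what makes this approach convenient when scaling horizontally.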

The last bit to point out is the volumes key. Here, we are not mapping it to any of the host’s directories but rather just defining a volume, which Docker will create (and know about) behind the scenes. This is useful because, as we learned previously, images are immutable and containers are disposable, so without a volume all the MySQL data would be lost as soon as the container is removed. This way, we make the data persistent between runs and keep it safe.

Conclusion

Let’s recap what we learned in this tutorial:

- Dockerfile is a blueprint for creating custom Docker images (or rather extending existing ones)

- Docker images are disposable, distributable and immutable filesystems

- Docker containers provide a basic Linux environment in which images can be run

- Each project can define its own Docker images based on its requirements

- With Docker, we don’t need to install anything locally (apart from Docker itself)

- For many purposes, we can just use existing images from Docker Hub

- Docker works natively on Linux with Windows and Mac support in the pipeline

- We can build images for anything, but each should have a single responsibility, usually in a form of a process running in the foreground

- docker-compose provides a convenient way to save us some typing

- Mapping provides a convenient way to connect host’s resources to the container

- Mounting provides a convenient way to plug in host’s directories in the container

I realize all of this can be quite daunting at first, but I strongly believe that Docker is the way to the future, not only because of the many benefits outlined throughout this tutorial but because it will force us to do things a certain way. You decide whether that way is good or bad, but any way that the majority of developers follows becomes a strong foundation for the future. WordPress taught us that, after all.

Speaking of WordPress, we didn’t deal with it in this part because it’s already long enough and will require some time to sink in, so I’ll cover that in the next post, which is scheduled in the coming weeks. Be sure to subscribe!

I’d like to thank Mario Peshev for a technical review of this article.
